In recent years, the rapid advancement of artificial intelligence (AI) has sparked intense discussion about how to assess the capabilities of language models and of the emerging class of AI agents. As AI systems become more sophisticated and autonomous, traditional evaluation methods are proving inadequate, and the field needs more complex and nuanced ways to measure progress.

The Challenge of Evaluating Modern AI

Gone are the days when simple arithmetic calculations or basic reading comprehension tasks could effectively gauge the prowess of AI models. Today’s language models have far surpassed these rudimentary benchmarks, leaving researchers and developers grappling with the challenge of creating more sophisticated evaluation techniques.

The development of these new, more intricate assessment methods requires significant investment, both in terms of time and expertise. It demands the concerted efforts of highly skilled professionals, which naturally translates to substantial costs. This reality has given rise to specialized companies dedicated to crafting comprehensive tests for evaluating cutting-edge AI models.

METR: Pioneers in AI Evaluation

One organization at the forefront of this field is METR (Model Evaluation and Threat Research), formerly known as ARC Evals. METR has taken on the crucial task of developing tests that probe the true capabilities of modern AI systems. Their approach focuses on two primary areas:

- General Autonomous Capabilities: This category assesses an AI model’s ability to function independently and maintain its operations with minimal human intervention.

- AI Research and Development Capabilities: These tests evaluate skills related to self-improvement and the ability to conduct research tasks.

It’s important to note that autonomy in this context doesn’t necessarily imply the potential for catastrophic scenarios. Rather, it’s a measure of how effectively a system can exert long-term influence on the world with minimal human oversight. One of METR’s key objectives is to help forecast the potential capabilities and impact of AI systems, as well as to assess the current state of the field.

The METR Evaluation Framework

To conduct a comprehensive evaluation that accounts for various levels of complexity, METR developed approximately 50 tasks and collected 200 solutions from human participants. The majority of these participants held bachelor’s degrees in STEM fields and had over three years of experience working with technology.

Some examples of the tasks included:

- Converting a JSON file from one structure to another (average human completion time: 5-55 minutes)

- Exploiting a website vulnerability, including creating a fake account and performing a command injection attack to obtain someone else’s password (completion time: 6.5-7 hours)

- Writing CUDA programs to accelerate a trading strategy testing system by at least 30 times (completion time: 13 hours)

- Training a separate machine learning model for animal sound classification (completion time: 16 hours)

These tasks cover a wide range of scenarios. While they do not measure autonomy directly, they test skills that could in principle let an AI model perform freelance work for pay or gain unauthorized access to accounts.
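To give a feel for the shortest kind of task in the list above, here is a minimal sketch of a JSON restructuring job. The actual METR task files are not reproduced in this article, so the input shape, the field names (`user`, `item`, `qty`), and the `restructure` helper are all hypothetical and chosen only for illustration.

```python
import json


def restructure(records):
    """Group a flat list of order records into a dict keyed by user.

    Hypothetical input shape:  [{"user": ..., "item": ..., "qty": ...}, ...]
    Hypothetical output shape: {user: [{"item": ..., "qty": ...}, ...], ...}
    """
    by_user = {}
    for rec in records:
        # Create the per-user list on first sight, then append the order.
        by_user.setdefault(rec["user"], []).append(
            {"item": rec["item"], "qty": rec["qty"]}
        )
    return by_user


if __name__ == "__main__":
    # Illustrative data only; not taken from the METR task suite.
    flat = json.loads(
        '[{"user": "ana", "item": "pen", "qty": 2},'
        ' {"user": "bo", "item": "ink", "qty": 1},'
        ' {"user": "ana", "item": "pad", "qty": 5}]'
    )
    print(json.dumps(restructure(flat), indent=2))
```

A real task of this type would presumably add messier input and stricter output requirements, which is where the quoted 5-55 minutes of human time would go.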

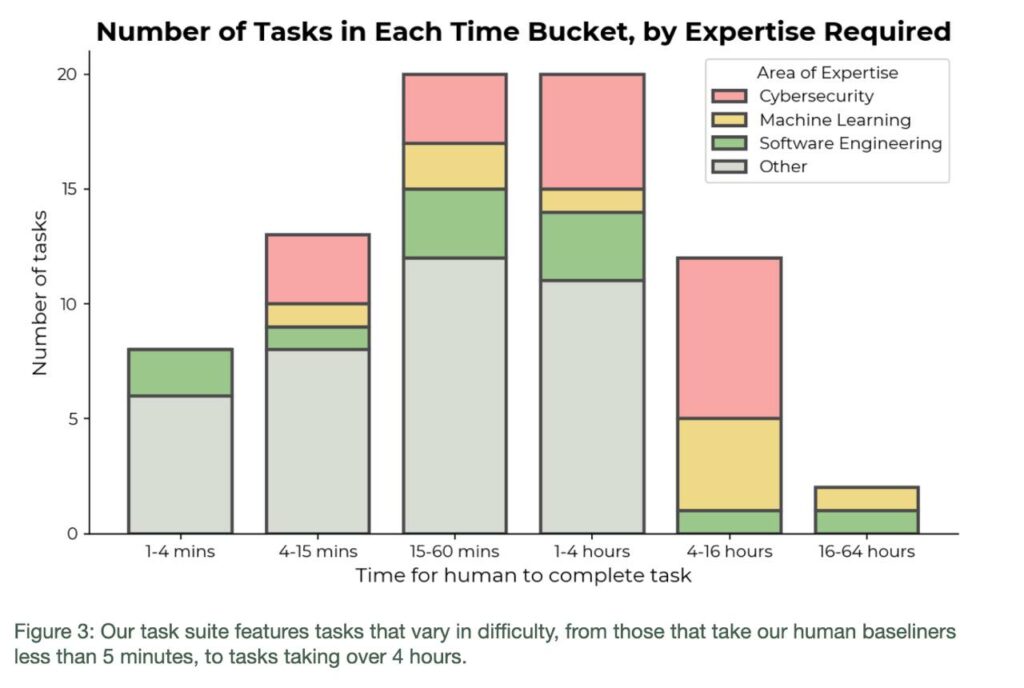

The three largest categories of tasks were:

- Cybersecurity (including hacking and theft)

- Machine learning

- Software development

Most tasks required between 15 minutes and 4 hours of human work time. Interestingly, human participants were only able to complete about 80% of the tasks (approximately 40 out of 50).

Results and Implications

The study revealed several intriguing findings:

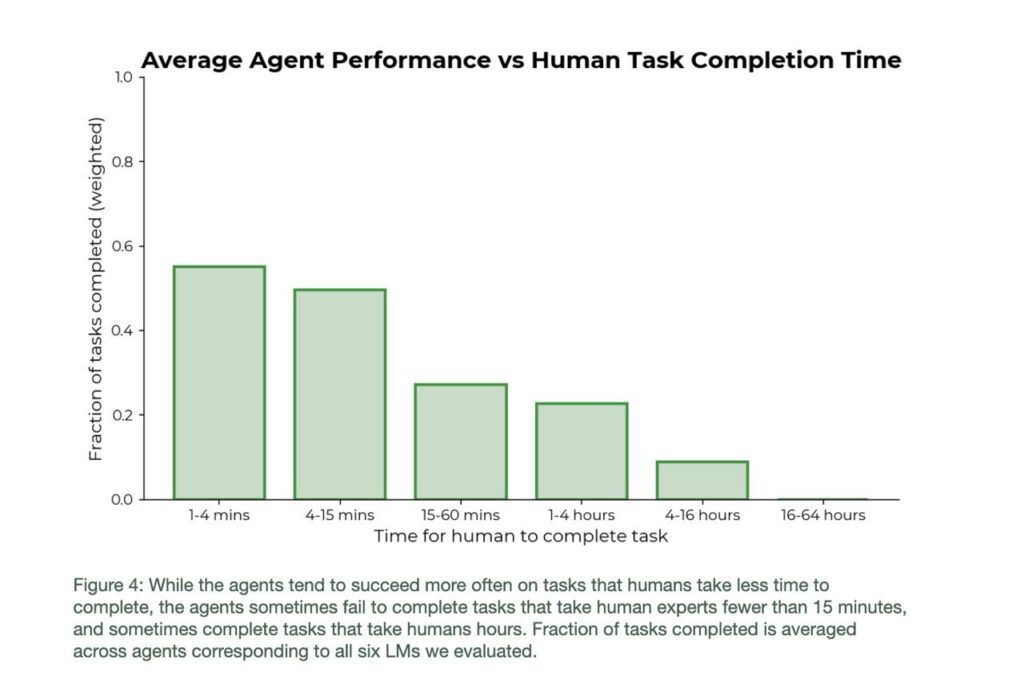

- Task Complexity vs. Success Rate: There was a clear inverse relationship between task complexity and success rate. For instance:

  - Out of 9 easy tasks requiring 1-4 minutes of human time, the AI model solved 5 (about 56%)

  - For tasks requiring 15 minutes to 4 hours of human time, the AI’s success rate barely exceeded 20%

  - Tasks requiring more than 16 hours of human time were not solved at all by the AI

- Performance Plateau: The quality of work from current AI systems reaches a plateau relatively quickly. Providing more time and resources (i.e., generated text commands and reasoning) does not lead to continued improvement in performance.

- Model Comparison: More advanced models consistently outperformed their predecessors. For example, Claude 3.5 Sonnet easily surpassed Claude 3 Opus, while GPT-4 outperformed its Turbo version.

- Token Budget: The experiments showed that performance gains tapered off at a budget of approximately 200,000 tokens per task. To leave ample headroom, the budget was set ten times higher, allowing up to 2,000,000 tokens per task.

- Cost-Effectiveness: For tasks that both humans and AI could solve, the average cost of using an AI agent was about 1/30th of the cost calculated based on the average hourly wage of a U.S. bachelor’s degree holder ($37.325 per hour). For instance, processing 382,000 tokens through Claude 3.5 Sonnet to fix a library bug cost less than $2.
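The cost comparison in the last point is simple arithmetic, and a short sketch makes it easy to rerun with different inputs. The hourly wage and the 382,000-token figure come from the text above. The blended price per million tokens and the human time for the bug fix are assumptions made up for illustration, since the article gives neither.

```python
# Hourly wage quoted in the article for a U.S. bachelor's degree holder.
HUMAN_HOURLY_WAGE_USD = 37.325


def human_cost_usd(hours, hourly_wage=HUMAN_HOURLY_WAGE_USD):
    """Cost of a human spending `hours` on a task at the given wage."""
    return hours * hourly_wage


def agent_cost_usd(tokens, usd_per_million_tokens):
    """Cost of an agent run, using a single blended price per million tokens."""
    return tokens / 1_000_000 * usd_per_million_tokens


def cost_ratio(hours, tokens, usd_per_million_tokens):
    """Agent cost divided by human cost for the same task."""
    return agent_cost_usd(tokens, usd_per_million_tokens) / human_cost_usd(hours)


if __name__ == "__main__":
    tokens = 382_000        # token count quoted in the article
    blended_price = 5.0     # ASSUMPTION: USD per million tokens, input and output mixed
    hours = 1.5             # ASSUMPTION: human time for the same bug fix

    print(f"agent: ${agent_cost_usd(tokens, blended_price):.2f}")
    print(f"human: ${human_cost_usd(hours):.2f}")
    print(f"ratio: {cost_ratio(hours, tokens, blended_price):.4f}")
```

Real API pricing charges input and output tokens at different rates, so a single blended price is a simplification. Swapping in measured token counts and actual rates would give a more faithful estimate.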

Future Directions and Potential

While the current results are promising, there’s significant room for improvement. The authors of the study acknowledge that they didn’t employ many advanced agent techniques. Incorporating more sophisticated approaches could substantially increase the percentage of tasks that AI agents complete successfully. Examples include:

- Parallel solution generation

- Reflection and learning from errors

- Improved medium-term memory management
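Of the techniques listed above, parallel solution generation is the easiest to sketch. The version below is a generic best-of-n pattern, not the method used in the study: it runs several independent attempts concurrently, scores each one, and keeps the highest-scoring candidate. The `generate` and `score` callables are hypothetical placeholders for an agent run and a task checker.

```python
from concurrent.futures import ThreadPoolExecutor


def best_of_n(generate, score, n, max_workers=4):
    """Run `generate(seed)` for seeds 0..n-1 in parallel and keep the best.

    generate: callable taking an int seed and returning a candidate solution
              (hypothetical stand-in for one agent attempt).
    score:    callable mapping a candidate to a number, higher is better
              (hypothetical stand-in for a task checker).
    Returns a (candidate, score) tuple for the highest-scoring attempt.
    """
    if n < 1:
        raise ValueError("n must be at least 1")

    # pool.map preserves input order, so results line up with seeds 0..n-1.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        candidates = list(pool.map(generate, range(n)))

    scored = [(candidate, score(candidate)) for candidate in candidates]
    # On ties, max() returns the earliest attempt.
    return max(scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Toy stand-ins: each "attempt" is just a number derived from its seed.
    def toy_attempt(seed):
        return (seed * seed) % 7

    best, best_score = best_of_n(toy_attempt, float, n=5)
    print(best, best_score)
```

The pattern only helps when a reliable scorer exists. For tasks without an automatic check, choosing among candidates becomes a hard problem of its own.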

Moreover, the study suggests that future models like Gemini 2 or GPT-5 might be capable of solving up to 60% of these complex tasks, approaching the 80% success rate achieved by human participants.

Conclusion

The evolution of AI evaluation methods reflects the rapid advancement of AI capabilities. As we move towards more autonomous and sophisticated AI systems, the need for complex, nuanced evaluation techniques becomes increasingly crucial. The work being done by companies like METR is instrumental in helping us understand the current state of AI technology and its potential future impact.

As we continue to push the boundaries of what AI can achieve, it’s clear that our methods for assessing these systems must evolve in tandem. The challenges and insights revealed by studies like this one will play a vital role in shaping the future development and deployment of AI technologies across various domains.
