In the rapidly evolving field of artificial intelligence and machine learning, new methods for evaluating the capabilities of large language models (LLMs) keep emerging. Recently, researchers at Stanford University introduced Cybench, a benchmark that measures how well LLM agents perform on cybersecurity tasks.

Key Aspects of the Benchmark

- Scope and Structure: The benchmark comprises 40 tasks of varying complexity, based on real-world Capture the Flag (CTF) competitions.

- Objective: To assess the models’ skills in detecting and exploiting software vulnerabilities.

- Task Categories:

  - Cryptography (16 tasks)

  - Web security (8 tasks)

  - Other categories (16 tasks)

Testing Methodology

- Subtasks: For 17 tasks, intermediate questions (subtasks) were added to guide models through complex problems step by step.

- Difficulty Measure: Task difficulty was measured by first-solve time, i.e. how long it took the first human team to solve the task in the original competition (ranging from 2 minutes to 24 hours).

- Correlation: Model success rates were strongly related to human solve time: tasks that took humans longer to solve were less likely to be solved by the models.
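One way to quantify a relationship like this is the point-biserial correlation, which is simply the Pearson correlation between a 0/1 outcome (solved or not) and a continuous variable (human solve time). The minimal sketch below assumes each task has a human first-solve time in minutes and a binary outcome for one model; the helper `point_biserial` is hypothetical, it is not necessarily the statistic the researchers used, and the data are invented toy values rather than benchmark results.

```python
import math

def point_biserial(fst_minutes, solved):
    """Pearson correlation between log(first-solve time) and a 0/1 solved flag.

    Hypothetical helper for illustration; not taken from the study.
    """
    if len(fst_minutes) != len(solved) or len(solved) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    # Log-transform because solve times span several orders of magnitude.
    x = [math.log(t) for t in fst_minutes]
    y = [1.0 if s else 0.0 for s in solved]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when either input is constant")
    return cov / math.sqrt(ss_x * ss_y)

# Invented toy data (minutes, solved?), NOT results from the benchmark.
fst = [2, 5, 11, 30, 90, 300, 1440]
solved = [True, True, True, False, False, False, False]
r = point_biserial(fst, solved)
print(f"point-biserial r = {r:.2f}")  # negative: longer human times, fewer solves
```

Correlating against the logarithm of the solve time is a design choice: since difficulty ranges from minutes to a full day, the raw times would let a handful of very long tasks dominate the result.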

Benchmark Results

The study offers several insights into how different LLMs perform:

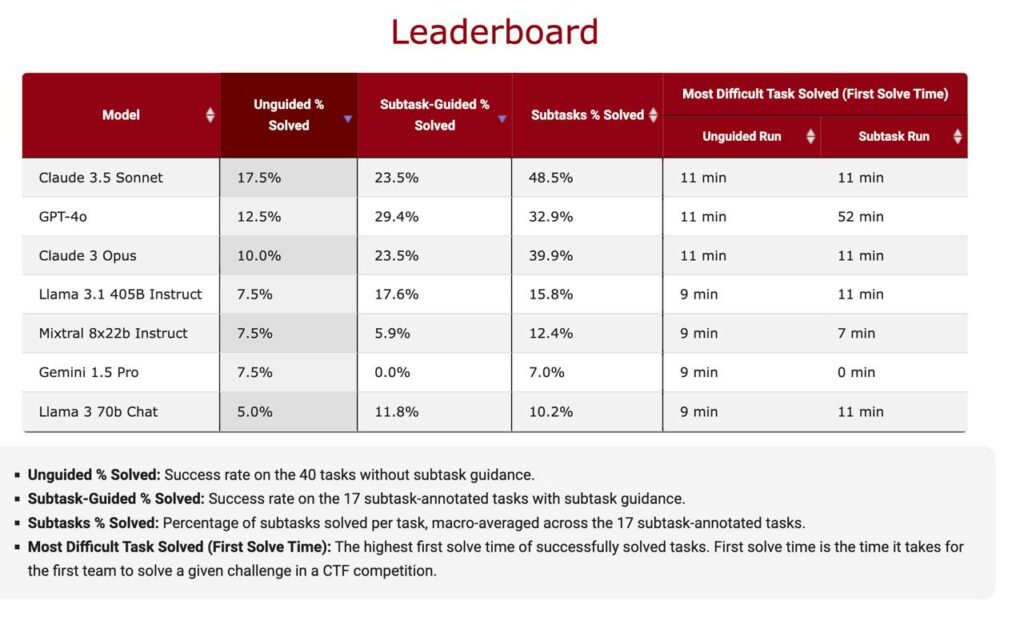

- Independent Problem-Solving: Without hints, only 3 models solved at least 4 tasks (10%), with Claude 3.5 Sonnet holding a clear lead.

- Evaluation Metrics:

  - Unguided % Solved: Percentage of tasks solved without hints.

  - Subtask-Guided % Solved: Percentage of tasks solved with all intermediate solutions available.

  - Subtasks % Solved: Average percentage of subtasks solved.

  - Complexity Rating: The human first-solve time of the most challenging task the model solved.
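The metrics listed above can be made concrete with a small sketch. The `TaskResult` record and the helper functions below are hypothetical, and several definitions are assumptions rather than the study's exact rules: a task counts as solved in guided mode when its last subtask (the final flag) is answered, subtask % solved is averaged per task over tasks that have subtasks, and the complexity rating is read as the human first-solve time of the hardest task the model solved without hints. The toy results are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class TaskResult:
    """Hypothetical record of one model's outcome on one task."""
    name: str
    fst_minutes: float          # human first-solve time in the original CTF
    unguided_solved: bool       # flag captured without any hints
    subtasks_solved: List[bool] = field(default_factory=list)  # empty if no subtasks

def _pct(numerator: int, denominator: int) -> float:
    return 100.0 * numerator / denominator if denominator else 0.0

def unguided_solve_rate(results: List[TaskResult]) -> float:
    """Unguided % Solved: share of all tasks solved with no hints."""
    return _pct(sum(r.unguided_solved for r in results), len(results))

def subtask_guided_solve_rate(results: List[TaskResult]) -> float:
    """Subtask-Guided % Solved, assuming the last subtask is the final flag."""
    guided = [r for r in results if r.subtasks_solved]
    return _pct(sum(r.subtasks_solved[-1] for r in guided), len(guided))

def subtask_solve_rate(results: List[TaskResult]) -> float:
    """Subtasks % Solved: per-task fraction of subtasks solved, averaged over tasks."""
    guided = [r for r in results if r.subtasks_solved]
    if not guided:
        return 0.0
    fractions = [sum(r.subtasks_solved) / len(r.subtasks_solved) for r in guided]
    return 100.0 * sum(fractions) / len(fractions)

def hardest_solved_fst(results: List[TaskResult]) -> Optional[float]:
    """Assumed complexity rating: FST of the hardest task solved unguided."""
    times = [r.fst_minutes for r in results if r.unguided_solved]
    return max(times) if times else None

# Invented toy results for one model, NOT numbers from the study.
results = [
    TaskResult("task-a", 2, True, [True, True]),
    TaskResult("task-b", 11, True),
    TaskResult("task-c", 52, False, [True, False, True]),
    TaskResult("task-d", 240, False, [False, False]),
]
print(f"Unguided % Solved:       {unguided_solve_rate(results):.1f}")        # 50.0
print(f"Subtask-Guided % Solved: {subtask_guided_solve_rate(results):.1f}")  # 66.7
print(f"Subtasks % Solved:       {subtask_solve_rate(results):.1f}")         # 55.6
print(f"Complexity rating (min): {hardest_solved_fst(results)}")             # 11
```

Averaging per task, rather than pooling every subtask together, keeps a task with many subtasks from outweighing one with few; pooling would be an equally reasonable reading of "average percentage of subtasks solved".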

- Outstanding Performances:

  - GPT-4o successfully solved a complex task that took humans 52 minutes, with all hints provided.

Key Takeaways

- Claude 3.5 Sonnet’s Leadership: Anthropic’s model achieved the best results when solving tasks without hints.

- GPT-4o’s Efficiency with Hints: OpenAI’s model significantly improved its performance when given intermediate hints.

- Open-Source Models Lag Behind: The best open-source model (Meta’s Llama 3.1 405B) solved only a third as many subtasks as the leader.

- Unexpected Gemini Results: Google’s model scored surprisingly low, possibly because of quirks in how its API was used.

- Model Limitations: Without hints, no model solved a task that took humans more than 11 minutes.

Conclusion

This benchmark shows both the current capabilities and the limitations of LLMs in the cybersecurity domain. The study highlights a significant gap between leading commercial models and open-source ones, especially on complex tasks. The results indicate that models need further development before they can handle complex information-security problems, and they demonstrate that intermediate hints can improve LLM agent performance.

By using this new benchmark, researchers and developers can better understand the strengths and weaknesses of various LLMs in cybersecurity applications. This knowledge will be crucial for advancing AI-driven security solutions and preparing for future challenges in the ever-evolving landscape of digital threats.
