Building autonomous agents with a Large Language Model (LLM) as the core controller is a compelling idea. Several proof-of-concept demos, such as AutoGPT, GPT-Engineer, and BabyAGI, illustrate this potential. The capabilities of LLMs extend well beyond generating well-written text and code; an LLM can be framed as a powerful general problem solver.

Overview of the Agent System

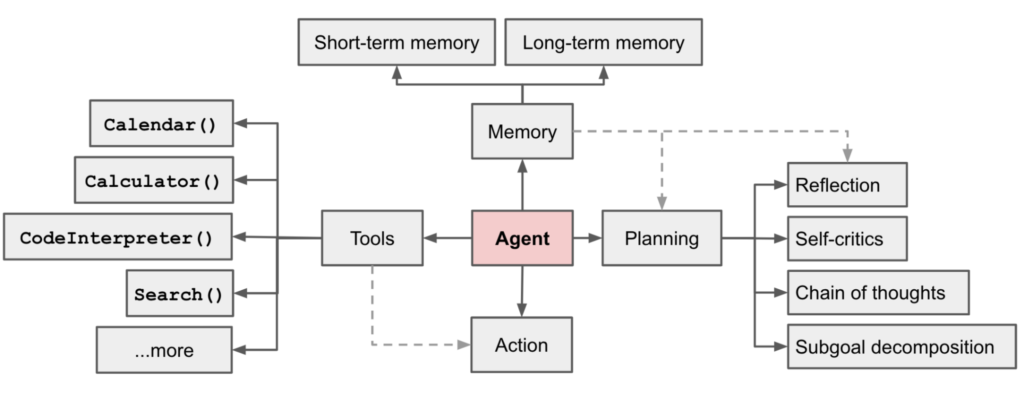

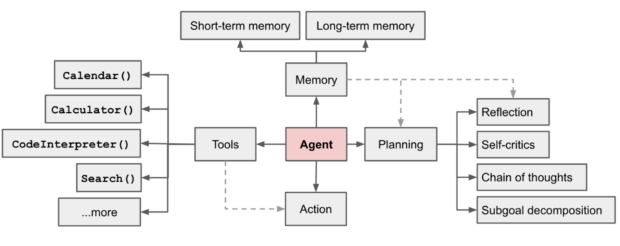

In an LLM-powered autonomous agent framework, the LLM acts as the brain of the agent, supported by several essential components:

Planning

- Subgoals and Decomposition: The agent breaks large tasks into smaller, manageable subgoals, enabling efficient handling of complex problems.

- Reflection and Refinement: The agent can engage in self-evaluation and learn from previous actions, refining its approach for future tasks, thereby enhancing the quality of its outcomes.

Memory

- Short-term Memory: This involves using in-context learning to handle tasks within the model’s current session.

- Long-term Memory: Enables the agent to store and retrieve vast amounts of information over extended periods, often utilizing an external vector database for swift access.

Tool Utilization

The agent learns to access external APIs to acquire additional information beyond its model parameters, such as up-to-date data, code execution capabilities, and access to specialized data sources.

Component One: Planning

Handling complex tasks often involves multiple steps, necessitating the agent’s ability to identify and plan these steps ahead of time.

Task Breakdown

The “Chain of Thought” (CoT) method (Wei et al., 2022) is a widely used prompting technique to boost model performance on complex tasks. This approach encourages the model to “think step by step,” breaking down difficult tasks into smaller, more straightforward steps, thereby illuminating the model’s reasoning process.

“Tree of Thoughts” (Yao et al., 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates several candidate thoughts per step, creating a tree structure. The search can be conducted with BFS (breadth-first search) or DFS (depth-first search), with each state evaluated by a classifier (via a prompt) or by majority vote.
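A minimal sketch of the BFS variant, assuming the LLM is wrapped in two hypothetical callables: `propose` (generate candidate next thoughts for a partial solution) and `score` (a value estimate, which in Tree of Thoughts would come from prompting the model). The toy stand-ins below just build digit strings and prefer larger digit sums, so the search logic runs on its own.

```python
from typing import Callable, List


def tot_bfs(
    root: str,
    propose: Callable[[str], List[str]],
    score: Callable[[str], float],
    depth: int,
    beam: int,
) -> str:
    """Breadth-first Tree-of-Thoughts search that keeps `beam` states per level."""
    frontier = [root]
    for _ in range(depth):
        # Expand every partial solution into its candidate next thoughts.
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        # Evaluate candidates and keep only the most promising ones.
        frontier = sorted(candidates, key=score, reverse=True)[:beam]
    return max(frontier, key=score)


if __name__ == "__main__":
    # Hypothetical stand-ins for LLM calls: append a digit; prefer larger digit sums.
    best = tot_bfs(
        "",
        propose=lambda s: [s + d for d in "123"],
        score=lambda s: sum(map(int, s)),
        depth=3,
        beam=2,
    )
    print(best)  # → 333
```

Swapping the level-by-level beam for a stack of states gives the DFS variant.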

Task breakdown can be achieved by:

- Prompting the LLM with simple instructions like “Steps for XYZ” or “What are the subgoals for achieving XYZ?”

- Using task-specific prompts such as “Write a story outline” for novel writing.

- Integrating human input.
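For the first option, the harness only needs to send the prompt and turn the numbered reply into a list. A sketch, assuming a hypothetical `llm` callable that returns the completion text and a reply formatted as a numbered list:

```python
import re
from typing import Callable, List

# Matches lines such as "2. Mix batter" and captures the step text.
STEP_RE = re.compile(r"^[ \t]*\d+\.[ \t]*(.+?)[ \t]*$", re.MULTILINE)


def decompose(task: str, llm: Callable[[str], str]) -> List[str]:
    """Ask the model for numbered steps and return them as a list of strings."""
    prompt = f"Steps for {task}.\n1."
    completion = llm(prompt)
    # The prompt ends with "1.", so re-attach it before parsing the numbered list.
    return STEP_RE.findall("1." + completion)


if __name__ == "__main__":
    # Hypothetical stand-in for an LLM completion call.
    fake_llm = lambda prompt: " Gather ingredients\n2. Mix batter\n3. Bake for 30 minutes"
    print(decompose("baking a cake", fake_llm))
```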

An alternative approach, LLM+P (Liu et al., 2023), involves using an external classical planner for long-term planning. This technique employs the Planning Domain Definition Language (PDDL) to describe planning problems. Here, the LLM:

- Converts the problem into “Problem PDDL.”

- Requests a classical planner to devise a PDDL plan based on an existing “Domain PDDL.”

- Translates the PDDL plan back into natural language.

This approach outsources planning to an external tool, assuming the availability of domain-specific PDDL and a suitable planner, which is common in robotics but less so in other domains.
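To make the division of labor concrete, here is a sketch in which the LLM's two translation steps are assumed to have already happened. The hypothetical domain and problem are hand-written as STRIPS-style Python sets rather than PDDL, and a tiny breadth-first search stands in for the classical planner; LLM+P itself relies on an existing PDDL planner.

```python
from collections import deque
from typing import FrozenSet, List, Optional, Tuple

# A STRIPS action: (name, preconditions, add effects, delete effects).
Action = Tuple[str, FrozenSet[str], FrozenSet[str], FrozenSet[str]]

# Hypothetical "domain": a robot moving between rooms A and B and fetching a key.
ACTIONS: List[Action] = [
    ("move-A-B", frozenset({"at-A"}), frozenset({"at-B"}), frozenset({"at-A"})),
    ("move-B-A", frozenset({"at-B"}), frozenset({"at-A"}), frozenset({"at-B"})),
    ("pick-key-B", frozenset({"at-B", "key-at-B"}), frozenset({"has-key"}), frozenset({"key-at-B"})),
]
# Hypothetical "problem": start in A with the key in B; end in A holding the key.
INIT = frozenset({"at-A", "key-at-B"})
GOAL = frozenset({"at-A", "has-key"})


def plan(init: FrozenSet[str], goal: FrozenSet[str], actions: List[Action]) -> Optional[List[str]]:
    """Breadth-first search over states; returns a shortest action sequence or None."""
    frontier = deque([(init, [])])
    seen = {init}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for name, pre, add, delete in actions:
            if pre <= state:
                nxt = (state - delete) | add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None


if __name__ == "__main__":
    # The LLM's final job would be to verbalize this plan in natural language.
    print(plan(INIT, GOAL, ACTIONS))  # → ['move-A-B', 'pick-key-B', 'move-B-A']
```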

Self-Reflection

Self-reflection enables autonomous agents to iteratively improve by evaluating past actions and correcting mistakes. It is crucial in real-world scenarios where trial and error are inevitable.

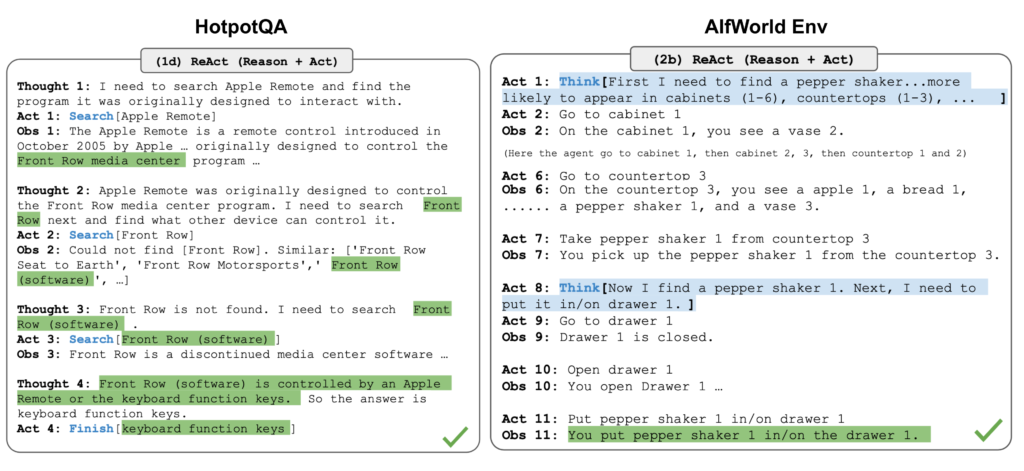

“ReAct” (Yao et al., 2023) combines reasoning and acting within LLMs by expanding the action space to include both task-specific actions and language space. This allows LLMs to interact with their environment (e.g., using a Wikipedia search API) while generating reasoning traces in natural language.

The ReAct prompt structure typically follows this format:

- Thought: …

- Action: …

- Observation: …

- (Repeated)

In experiments with knowledge-intensive and decision-making tasks, ReAct outperformed the “Act-only” baseline, which omitted the Thought: … step.
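The Thought/Action/Observation cycle can be sketched as a small control loop. In this sketch the model (a hypothetical `llm` callable, scripted here) writes a Thought and an Action per turn, the harness runs the named tool and appends an Observation, and the loop ends on a `Finish[...]` action. The `Name[argument]` syntax echoes the Wikipedia actions in the ReAct paper, but the parser, tool names, and step budget are assumptions.

```python
import re
from typing import Callable, Dict

# Parses lines such as "Action: Search[France]" into (tool name, argument).
ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)


def react(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        # The model sees the whole transcript and writes the next Thought + Action.
        step = llm(transcript)
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: no valid Action found.\n"
            continue
        name, arg = match.group(1), match.group(2)
        if name == "Finish":
            return arg
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name}"
        transcript += f"Observation: {observation}\n"
    return "no answer within step budget"


if __name__ == "__main__":
    # Scripted stand-in for the LLM and a hypothetical search tool.
    replies = iter([
        "Thought: I should look up France.\nAction: Search[France]",
        "Thought: The observation names Paris.\nAction: Finish[Paris]",
    ])
    tools = {"Search": lambda q: "Paris is the capital of France."}
    print(react("What is the capital of France?", lambda t: next(replies), tools))  # → Paris
```

Dropping the `Thought:` lines from the model's output would turn this into the Act-only baseline.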

“Reflexion” (Shinn & Labash, 2023) equips agents with dynamic memory and self-reflection capabilities to improve their reasoning skills. It uses a standard reinforcement learning (RL) setup in which the reward model provides a simple binary reward. The action space follows ReAct: task-specific actions are augmented with language to enable complex reasoning steps.
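The trial loop can be sketched as follows, with the actor, the binary reward, and the reflection step as hypothetical callables (in Reflexion each would involve an LLM or the environment). After a failed trial the agent writes a verbal lesson into memory, and later trials are conditioned on those lessons. Capping memory at three reflections is an assumption made here to respect the context window.

```python
from typing import Callable, List, Optional


def reflexion(
    task: str,
    act: Callable[[str, List[str]], str],
    succeeded: Callable[[str], bool],
    reflect: Callable[[str, str], str],
    max_trials: int = 3,
    memory_size: int = 3,
) -> Optional[str]:
    """Retry a task, feeding self-reflections from failed trials into later ones."""
    reflections: List[str] = []
    for _ in range(max_trials):
        # One ReAct-style trial, conditioned on the lessons stored so far.
        trajectory = act(task, reflections)
        if succeeded(trajectory):  # binary reward
            return trajectory
        # Turn the failure into a verbal lesson; keep only the most recent ones.
        reflections.append(reflect(task, trajectory))
        reflections = reflections[-memory_size:]
    return None


if __name__ == "__main__":
    # Hypothetical stand-ins: the actor only succeeds once a lesson tells it what to do.
    act = lambda task, refl: "answer=42" if any("use 42" in r for r in refl) else "answer=0"
    result = reflexion(
        "find the answer",
        act,
        succeeded=lambda traj: traj == "answer=42",
        reflect=lambda task, traj: f"{traj} was wrong; use 42 next time",
    )
    print(result)  # → answer=42
```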

Component Two: Memory

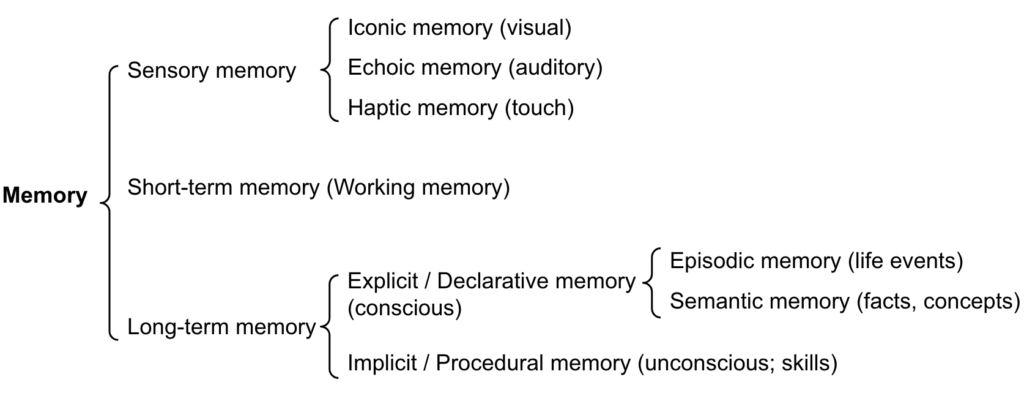

Memory encompasses the processes of acquiring, storing, retaining, and retrieving information. Several memory types exist in the human brain:

- Sensory Memory: This initial stage retains sensory impressions (visual, auditory, etc.) briefly after stimuli have ceased. Subtypes include iconic (visual), echoic (auditory), and haptic (touch) memory.

- Short-Term Memory (STM)/Working Memory: Stores information currently in use, facilitating tasks like learning and reasoning. STM is believed to hold around 7 items (Miller, 1956) and lasts for 20-30 seconds.

- Long-Term Memory (LTM): Stores information over extended periods, from days to decades, with virtually unlimited capacity. Subtypes include:

  - Explicit/Declarative Memory: Conscious recall of facts and events, including episodic (events/experiences) and semantic (facts/concepts) memory.

  - Implicit/Procedural Memory: Unconscious skills and routines, like biking or typing.

These human memory types map roughly onto an LLM agent as follows:

- Sensory Memory: Learning embeddings for raw inputs (text, images, etc.).

- Short-term Memory: In-context learning within the limited context window.

- Long-term Memory: External vector storage accessed via fast retrieval.
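Because short-term memory is bounded by the context window, an agent needs a rule for what stays in the prompt. One simple sketch: keep the newest messages that fit a token budget and hand older ones to long-term storage. Counting whitespace-separated words is a crude stand-in for a real tokenizer, assumed here for illustration.

```python
from typing import List, Tuple


def split_memory(messages: List[str], budget: int) -> Tuple[List[str], List[str]]:
    """Return (messages kept in context, older messages to move to long-term memory)."""
    used = 0
    cut = len(messages)
    # Walk backwards from the newest message until the budget would be exceeded.
    for i in range(len(messages) - 1, -1, -1):
        cost = len(messages[i].split())  # crude token count (assumption)
        if used + cost > budget:
            break
        used += cost
        cut = i
    return messages[cut:], messages[:cut]


if __name__ == "__main__":
    history = ["user: hi there", "agent: hello", "user: book a table for two"]
    in_context, to_archive = split_memory(history, budget=8)
    print(in_context)
    print(to_archive)
```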

Maximum Inner Product Search (MIPS)

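External memory can alleviate the restriction of a finite attention span. A standard practice is to store embedding representations of information in a vector database that supports fast maximum inner-product search (MIPS). To speed up retrieval, the common choice is an approximate nearest neighbors (ANN) algorithm, which returns approximately the top k nearest neighbors, trading a small loss in accuracy for a large speedup. Common ANN algorithms include: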

- Locality-Sensitive Hashing (LSH): Uses a hashing function that maps similar inputs to the same buckets with high probability, where the number of buckets is much smaller than the number of inputs.

- ANNOY: Employs random projection trees for efficient search.

- Hierarchical Navigable Small World (HNSW): Uses small-world network principles for fast searching.

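- FAISS (Facebook AI Similarity Search): Assumes that in high-dimensional space, distances between nodes follow a Gaussian distribution, so data points should form clusters. It applies vector quantization by partitioning the vector space into clusters and then refining the quantization within each cluster.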

- ScaNN: Uses anisotropic vector quantization to maintain inner product similarity.
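The sketch below contrasts exact MIPS (score every stored vector) with one LSH family, random hyperplanes (sign random projections), to show the bucketing idea in miniature. It assumes embeddings are L2-normalized, so that inner product equals cosine similarity, which is what random hyperplanes approximate; the dimension, bit count, and seed are arbitrary choices.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def normalize(v: Vector) -> List[float]:
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def exact_mips(query: Vector, items: List[Vector], k: int) -> List[int]:
    """Brute-force baseline: scores every stored vector, O(N * d) per query."""
    return sorted(range(len(items)), key=lambda i: dot(query, items[i]), reverse=True)[:k]


class HyperplaneLSH:
    """Random-hyperplane LSH: vectors separated by a small angle tend to share a bucket."""

    def __init__(self, dim: int, n_bits: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]
        self.items: List[Vector] = []
        self.buckets: Dict[Tuple[int, ...], List[int]] = {}

    def _key(self, v: Vector) -> Tuple[int, ...]:
        # One bit per hyperplane, recording which side of it the vector lies on.
        return tuple(int(dot(p, v) >= 0.0) for p in self.planes)

    def index(self, items: List[Vector]) -> None:
        self.items = list(items)
        self.buckets = {}
        for i, v in enumerate(self.items):
            self.buckets.setdefault(self._key(v), []).append(i)

    def query(self, q: Vector, k: int) -> List[int]:
        # Only vectors in the query's bucket are scored exactly: fast but approximate.
        candidates = self.buckets.get(self._key(q), [])
        return sorted(candidates, key=lambda i: dot(q, self.items[i]), reverse=True)[:k]


if __name__ == "__main__":
    # Unit-length embeddings, so inner product equals cosine similarity.
    items = [normalize(v) for v in ([1.0, 0.0], [0.0, 1.0], [1.0, 1.0])]
    query = normalize([1.0, 0.1])
    print(exact_mips(query, items, k=2))  # → [0, 2]
    lsh = HyperplaneLSH(dim=2, n_bits=4)
    lsh.index(items)
    print(lsh.query(query, k=2))  # may miss neighbours that hash to other buckets
```

More hyperplanes give smaller, purer buckets but a higher chance of missing true neighbours; production systems typically use several hash tables to offset this.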

Component Three: Tool Utilization

Tool use is a defining characteristic of humans, allowing us to transcend physical and cognitive limitations. Equipping LLMs with external tools can significantly enhance their capabilities.

MRKL (Karpas et al., 2022) is a neuro-symbolic architecture for autonomous agents. It features a collection of “expert” modules, with the LLM routing inquiries to the most suitable module. These modules can be neural or symbolic, such as a math calculator or weather API.
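A toy MRKL-style router, as a sketch: the LLM's job (choosing a module and extracting its argument) is played by a hypothetical keyword heuristic, and the only symbolic expert is a small calculator. Everything here is illustrative, not the MRKL paper's implementation.

```python
import ast
import operator
from typing import Callable, Dict, Tuple

# Arithmetic operators the symbolic calculator module supports.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def calculator(expr: str) -> str:
    """Symbolic expert: evaluates + - * / on numbers by walking the AST (no eval())."""

    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError("unsupported expression")

    return str(ev(ast.parse(expr, mode="eval")))


def keyword_router(query: str) -> Tuple[str, str]:
    """Hypothetical stand-in for the LLM: pick a module and extract its argument."""
    if any(ch.isdigit() for ch in query):
        expr = "".join(ch for ch in query if ch in "0123456789+-*/(). ")
        return "calculator", expr.strip()
    return "fallback", query


EXPERTS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "fallback": lambda q: "(answered by the LLM directly)",
}


def mrkl_answer(query: str, router: Callable[[str], Tuple[str, str]]) -> str:
    module, argument = router(query)
    return EXPERTS.get(module, EXPERTS["fallback"])(argument)


if __name__ == "__main__":
    print(mrkl_answer("What is 17 * 3?", keyword_router))  # → 51
```

The router is the fragile part: a query such as "three times four" contains no digits and falls through to the fallback, which is the argument-extraction problem discussed next.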

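Their experiments on fine-tuning an LLM to call a calculator, using arithmetic as a test case, showed that verbal math problems were harder to solve than explicitly stated ones, because the LLM (a 7B Jurassic1-large model) failed to reliably extract the right arguments for basic arithmetic. The results highlight that even when an external symbolic tool works reliably, knowing when and how to use it is crucial, and this depends on the LLM's capability.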

TALM (Tool Augmented Language Models; Parisi et al., 2022) and Toolformer (Schick et al., 2023) fine-tune LMs to learn to use external tool APIs. ChatGPT Plugins and OpenAI API function calling are practical examples of LLMs augmented with tool-use capabilities.
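On the application side, function calling comes down to validating and executing a structured call emitted by the model. A sketch of that dispatch step, assuming a call shaped like `{"name": ..., "arguments": "<JSON string>"}` (loosely modeled on the OpenAI function-calling format); `get_current_weather` is a hypothetical stub, and no API client is involved.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def register_tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Make a Python function callable by name from model output."""
    TOOLS[fn.__name__] = fn
    return fn


@register_tool
def get_current_weather(city: str, unit: str = "celsius") -> str:
    # Hypothetical stub; a real tool would query a weather service.
    return f"22 degrees {unit} in {city}"


def dispatch(call: Dict[str, Any]) -> str:
    """Run a model-issued tool call and return a JSON string to feed back to the model."""
    name = call.get("name")
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(call.get("arguments", "{}"))
        result = TOOLS[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON or wrong parameter names are reported back, not raised.
        return json.dumps({"error": str(exc)})
    return json.dumps({"result": result})


if __name__ == "__main__":
    print(dispatch({"name": "get_current_weather", "arguments": '{"city": "Paris"}'}))
```

Returning errors as data rather than raising lets the model see what went wrong and retry with corrected arguments.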

HuggingGPT (Shen et al., 2023) uses ChatGPT as a task planner that selects models available on the HuggingFace platform according to their model descriptions, then summarizes the response based on the execution results.

The system involves four stages:

- Task Planning: LLM parses user requests into tasks with attributes like task type, ID, dependencies, and arguments.

- Model Selection: LLM distributes tasks to expert models using a multiple-choice framework.

- Task Execution: Expert models perform tasks and log results.

- Response Generation: LLM summarizes results for users.
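The task-planning output is what ties the four stages together. A sketch of the execution stage, assuming task records modeled on HuggingGPT's planning format (`task`, `id`, `dep` with `-1` meaning no dependency, and `args` that may reference an earlier task's output as `<resource>-N`); `run_model` is a hypothetical stand-in for invoking the selected expert model.

```python
from typing import Any, Callable, Dict, List

PREFIX = "<resource>-"


def execute_plan(
    tasks: List[Dict[str, Any]],
    run_model: Callable[[str, Dict[str, str]], str],
) -> Dict[int, str]:
    """Run planned tasks in dependency order; returns each task's output by id."""
    results: Dict[int, str] = {}
    pending = list(tasks)
    while pending:
        # A task is ready once every dependency (-1 means none) has produced output.
        ready = [t for t in pending if all(d == -1 or d in results for d in t["dep"])]
        if not ready:
            raise ValueError("circular or missing dependency in plan")
        for t in ready:
            # Substitute "<resource>-N" placeholders with the output of task N.
            args = {
                k: results[int(v[len(PREFIX):])] if v.startswith(PREFIX) else v
                for k, v in t["args"].items()
            }
            results[t["id"]] = run_model(t["task"], args)
            pending.remove(t)
    return results


if __name__ == "__main__":
    plan = [
        {"task": "text-to-speech", "id": 1, "dep": [0], "args": {"text": "<resource>-0"}},
        {"task": "image-to-text", "id": 0, "dep": [-1], "args": {"image": "photo.jpg"}},
    ]
    fake_model = lambda task, args: f"{task}({args})"
    print(execute_plan(plan, fake_model))
```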

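Deploying HuggingGPT in real-world use raises several challenges: efficiency (multiple rounds of LLM inference and interactions with other models slow the process down), reliance on a long context window to communicate complicated task content, and the stability of both LLM outputs and external model services.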

API-Bank (Li et al., 2023) is a benchmark for evaluating the performance of tool-augmented LLMs. It contains 53 commonly used API tools and 264 annotated dialogues involving 568 API calls. The APIs span search engines, smart home control, health data management, and more.

The API-Bank workflow evaluates agents’ tool use capabilities across three levels:

- Level-1: Assess the ability to call APIs correctly and respond to results.

- Level-2: Evaluate the ability to find and use APIs.

- Level-3: Test planning capabilities for complex user requests.

Case Studies

Scientific Discovery Agent

ChemCrow (Bran et al., 2023) is a domain-specific example in which an LLM is augmented with 13 expert-designed tools to accomplish tasks across organic synthesis, drug discovery, and materials design. The workflow combines ReAct- and MRKL-style tool use with CoT reasoning:

- LLM receives tool names, descriptions, and input/output details.

- It responds to prompts using the ReAct format: Thought, Action, Action Input, Observation.

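Interestingly, LLM-based evaluation concluded that GPT-4 and ChemCrow performed nearly equivalently, whereas human experts assessing the completion and chemical correctness of the solutions found that ChemCrow outperformed GPT-4 by a large margin. This points to a potential problem with using an LLM to evaluate its own performance in domains that require deep expertise.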

Boiko et al. (2023) explored LLM-driven agents for scientific discovery, using tools to browse the Internet, read documentation, execute code, call robotics experimentation APIs, and leverage other LLMs.

When tasked with “develop a novel anticancer drug,” the model followed steps like researching trends, selecting a target, and attempting synthesis.

They also examined risks, including illicit drug synthesis. When asked to synthesize agents from a test set of known chemical weapon agents, the system accepted 4 of 11 requests (36%) and attempted to obtain a synthesis solution, highlighting safety concerns.

Simulation of Generative Agents

Generative Agents (Park et al., 2023) is an experiment with 25 virtual characters in a sandbox environment, inspired by The Sims. These agents simulate human behavior for interactive applications.

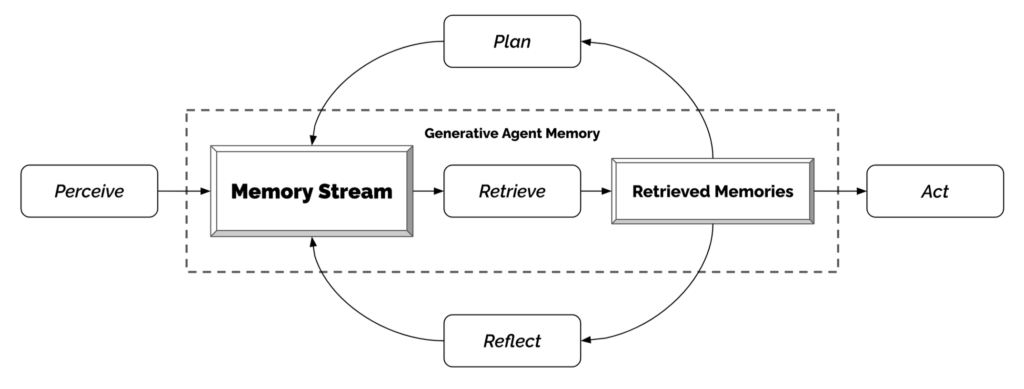

Generative agents use LLMs with memory, planning, and reflection mechanisms for realistic behavior:

- Memory Stream: Long-term memory records agents’ experiences.

- Retrieval Model: Determines context based on relevance, recency, and importance.

- Reflection Mechanism: Synthesizes memories into higher-level inferences.

- Planning & Reacting: Translates reflections into actions.
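The retrieval model is the most mechanical of these pieces. A sketch of its scoring, assuming a scheme along the lines of Park et al.: recency decays exponentially with game hours since the memory was last accessed, importance is an LLM-assigned rating, relevance is the cosine similarity to the query embedding, and each signal is min-max normalized before an equal-weight sum. The decay factor of 0.995 and the toy two-dimensional embeddings are illustrative values.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Memory:
    text: str
    embedding: Sequence[float]
    importance: float          # e.g. an LLM-assigned rating from 1 to 10
    hours_since_access: float  # game hours since the memory was last retrieved


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def min_max(xs: List[float]) -> List[float]:
    """Rescale scores to [0, 1] so that no single signal dominates the sum."""
    lo, hi = min(xs), max(xs)
    return [0.0 if hi == lo else (x - lo) / (hi - lo) for x in xs]


def retrieve(memories: List[Memory], query: Sequence[float], k: int, decay: float = 0.995) -> List[str]:
    if not memories:
        return []
    recency = min_max([decay ** m.hours_since_access for m in memories])
    importance = min_max([float(m.importance) for m in memories])
    relevance = min_max([cosine(m.embedding, query) for m in memories])
    # Equal-weight sum of the three normalized signals.
    scores = [r + i + v for r, i, v in zip(recency, importance, relevance)]
    ranked = sorted(range(len(memories)), key=lambda j: scores[j], reverse=True)
    return [memories[j].text for j in ranked[:k]]


if __name__ == "__main__":
    stream = [
        Memory("ate breakfast", [1.0, 0.0], 2, 1),
        Memory("planning a party", [0.0, 1.0], 8, 5),
        Memory("saw a party flyer", [0.6, 0.8], 5, 48),
    ]
    print(retrieve(stream, query=[0.0, 1.0], k=2))
```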

This simulation yields emergent social behavior, such as information diffusion, relationship memory, and social event coordination.

Proof-of-Concept Examples

AutoGPT has captured attention for its autonomous agent capabilities, despite reliability issues with natural language interfaces. Much of its code involves format parsing.

GPT-Engineer aims to create code repositories from natural language tasks, breaking them down into components and seeking user input for clarification.

Challenges

While exploring LLM-centric agents, several common challenges emerge:

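- Finite Context Length: Limited context capacity restricts the inclusion of historical information, detailed instructions, and API call context. System design must work within this limited communication bandwidth. Vector stores and retrieval can provide access to a larger knowledge pool, but their representation power is weaker than full attention. Mechanisms such as self-reflection, which learn from past mistakes, would benefit greatly from longer context windows.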

- Long-Term Planning and Task Breakdown: Planning over extensive histories and effectively exploring solutions remains challenging. LLMs struggle to adapt plans when faced with unexpected errors, limiting their robustness compared to humans who learn from trial and error.

- Reliability of Natural Language Interfaces: Current agent systems rely on natural language as an interface with memory and tools. However, model output reliability is uncertain, as LLMs may produce formatting errors and occasionally refuse instructions. Consequently, agent code often focuses on parsing model outputs.
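A common mitigation is to validate each output and re-prompt with the error. A sketch, assuming the model is asked for a JSON object with hypothetical required keys `thought` and `action`; `llm` is a hypothetical callable and the retry budget is arbitrary.

```python
import json
from typing import Any, Callable, Dict, Sequence

REQUIRED_KEYS = ("thought", "action")  # hypothetical output schema


def call_with_retries(
    prompt: str,
    llm: Callable[[str], str],
    required: Sequence[str] = REQUIRED_KEYS,
    max_attempts: int = 3,
) -> Dict[str, Any]:
    """Query the model until it returns a JSON object containing the required keys."""
    message = prompt
    for _ in range(max_attempts):
        raw = llm(message)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            error = f"invalid JSON ({exc.msg})"
        else:
            if not isinstance(data, dict):
                error = "expected a JSON object"
            else:
                missing = [key for key in required if key not in data]
                if not missing:
                    return data
                error = f"missing keys {missing}"
        # Feed the parsing error back so the model can fix its own format.
        message = f"{prompt}\n\nYour previous reply was rejected: {error}. Reply with JSON only."
    raise ValueError(f"no valid reply after {max_attempts} attempts")
```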

Taken together, LLM-driven autonomous agents show considerable promise for a wide range of applications, while the challenges above mark their current limitations.

References:

[1] Wei et al. “Chain of thought prompting elicits reasoning in large language models.” NeurIPS 2022

[2] Yao et al. “Tree of Thoughts: Deliberate Problem Solving with Large Language Models.” arXiv preprint arXiv:2305.10601 (2023).

[3] Liu et al. “Chain of Hindsight Aligns Language Models with Feedback.” arXiv preprint arXiv:2302.02676 (2023).

[4] Liu et al. “LLM+P: Empowering Large Language Models with Optimal Planning Proficiency.” arXiv preprint arXiv:2304.11477 (2023).

[5] Yao et al. “ReAct: Synergizing reasoning and acting in language models.” ICLR 2023.

[6] Google Blog. “Announcing ScaNN: Efficient Vector Similarity Search.” July 28, 2020.

[7] https://chat.openai.com/share/46ff149e-a4c7-4dd7-a800-fc4a642ea389

[8] Shinn & Labash. “Reflexion: an autonomous agent with dynamic memory and self-reflection.” arXiv preprint arXiv:2303.11366 (2023).

[9] Laskin et al. “In-context Reinforcement Learning with Algorithm Distillation” ICLR 2023.

[10] Karpas et al. “MRKL Systems: A modular, neuro-symbolic architecture that combines large language models, external knowledge sources and discrete reasoning.” arXiv preprint arXiv:2205.00445 (2022).

[11] Nakano et al. “WebGPT: Browser-assisted question-answering with human feedback.” arXiv preprint arXiv:2112.09332 (2021).

[12] Parisi et al. “TALM: Tool Augmented Language Models.”

[13] Schick et al. “Toolformer: Language Models Can Teach Themselves to Use Tools.” arXiv preprint arXiv:2302.04761 (2023).

[14] Weaviate Blog. “Why is Vector Search so fast?” Sep 13, 2022.

[15] Li et al. “API-Bank: A Benchmark for Tool-Augmented LLMs.” arXiv preprint arXiv:2304.08244 (2023).

[16] Shen et al. “HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in HuggingFace.” arXiv preprint arXiv:2303.17580 (2023).

[17] Bran et al. “ChemCrow: Augmenting large-language models with chemistry tools.” arXiv preprint arXiv:2304.05376 (2023).

[18] Boiko et al. “Emergent autonomous scientific research capabilities of large language models.” arXiv preprint arXiv:2304.05332 (2023).

[19] Park et al. “Generative Agents: Interactive Simulacra of Human Behavior.” arXiv preprint arXiv:2304.03442 (2023).

[20] AutoGPT. https://github.com/Significant-Gravitas/Auto-GPT

[21] GPT-Engineer. https://github.com/AntonOsika/gpt-engineer
